Real or fake concept represented on two opposite direction signs

New scam thanks to AI voice cloning via smartphone

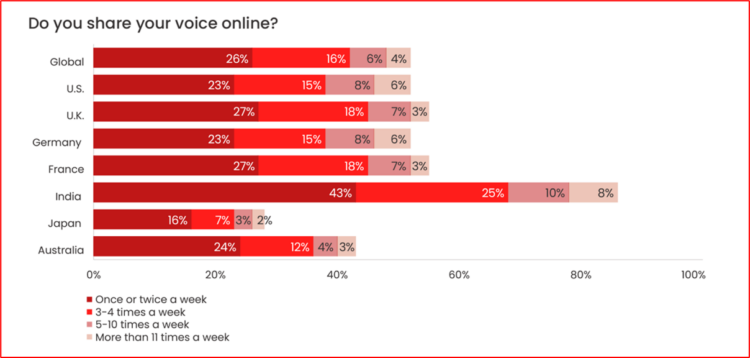

4 July 2023. Published by Raphael Doerr

Artificial intelligence is on the rise: hardly a day goes by without reports on social media about new, supposedly helpful AI tools. Sophisticated AI can now create entire pop songs, generate deceptively realistic photos, and even imitate human voices. The latter is now being exploited by scammers, who use cloned voices to call people on their smartphones. Every person’s voice is unique, the spoken equivalent of a biometric fingerprint, which is why hearing someone speak is a widely accepted way to establish trust. However, with 53% of adults sharing their voice data online at least once a week, for example via social media or voice memos, cloning a voice has become an easy task for professionals.

As reported by the newspaper Ruhr24, AI voice generators are used to replicate the voices of friends or family members, such as one’s own children. Relatives then receive a call on their smartphone from an AI-generated voice that is virtually indistinguishable from that of the real child or friend. As with the well-known grandchild trick, the victims are asked for money to help the caller out of a supposed emergency. Concerned relatives are often convinced by the deceptively genuine cloned voice and persuaded to transfer money to the scammers. The Washington Post first reported on the new AI scam in March. According to the report, it has been in circulation in the USA for some time. According to a report by Sat.1, affected Americans have already lost a total of several million euros.

Security experts warn

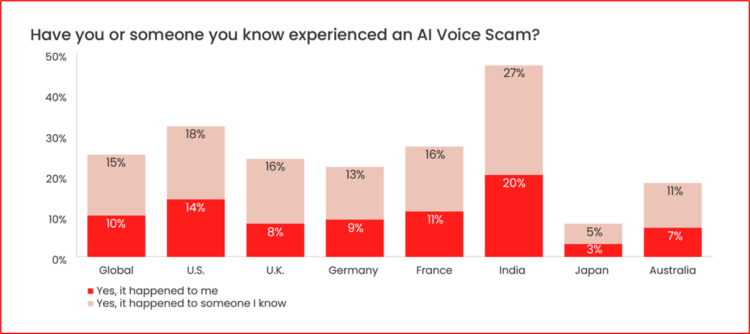

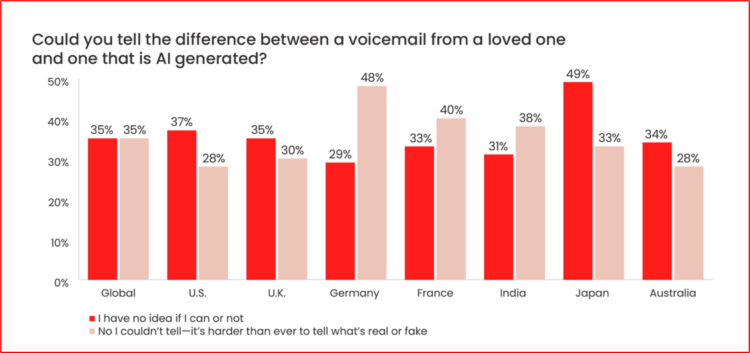

McAfee security experts devoted an entire report to this scam in late May. The research shows that scammers use AI technology to clone voices and then send a fake voicemail or call the victim’s contacts to pretend to be in distress. 70% of adults aren’t sure they could tell the cloned version from the real thing, so it’s no surprise that this technique is gaining steam.

Nearly half (45%) of respondents said they would reply to a voicemail message claiming to be from a friend or loved one who needed money, especially if they believed the request came from their partner or spouse (40%), a parent (31%) or a child (20%). Parents over age 50 were the most likely to respond to a child (41%). Messages claiming the sender had been in a car accident (48%), been robbed (47%), lost their phone or wallet (43%), or needed help while traveling abroad (41%) were the most likely to elicit a response. Cyber experts are certain that this type of AI rip-off using fake voices will continue to grow in the near future. According to their survey, more than 20 percent of Germans have already come into contact with an AI-faked voice at some point, for example on social media.

The technique behind this is called AI voice cloning. It is a so-called deepfake technique that analyzes and then clones human voices. The AI needs only a short voice sample, which can be found almost everywhere nowadays: on social media, in voice messages and the like. Numerous AI voice cloning programs already exist that can faithfully imitate voices, and some can even be downloaded quite easily from the popular app stores. The technology is already being used in the film and games industries, as well as for social media content.
